Key Features

- LLM Support: Pluggable architecture that works with both cloud-hosted (Google Gemini, OpenRouter) and local (Ollama) language models

- Intelligent Query Generation: AI agent with tool-calling capabilities that autonomously retrieves the database schema, validates queries, and executes SQL

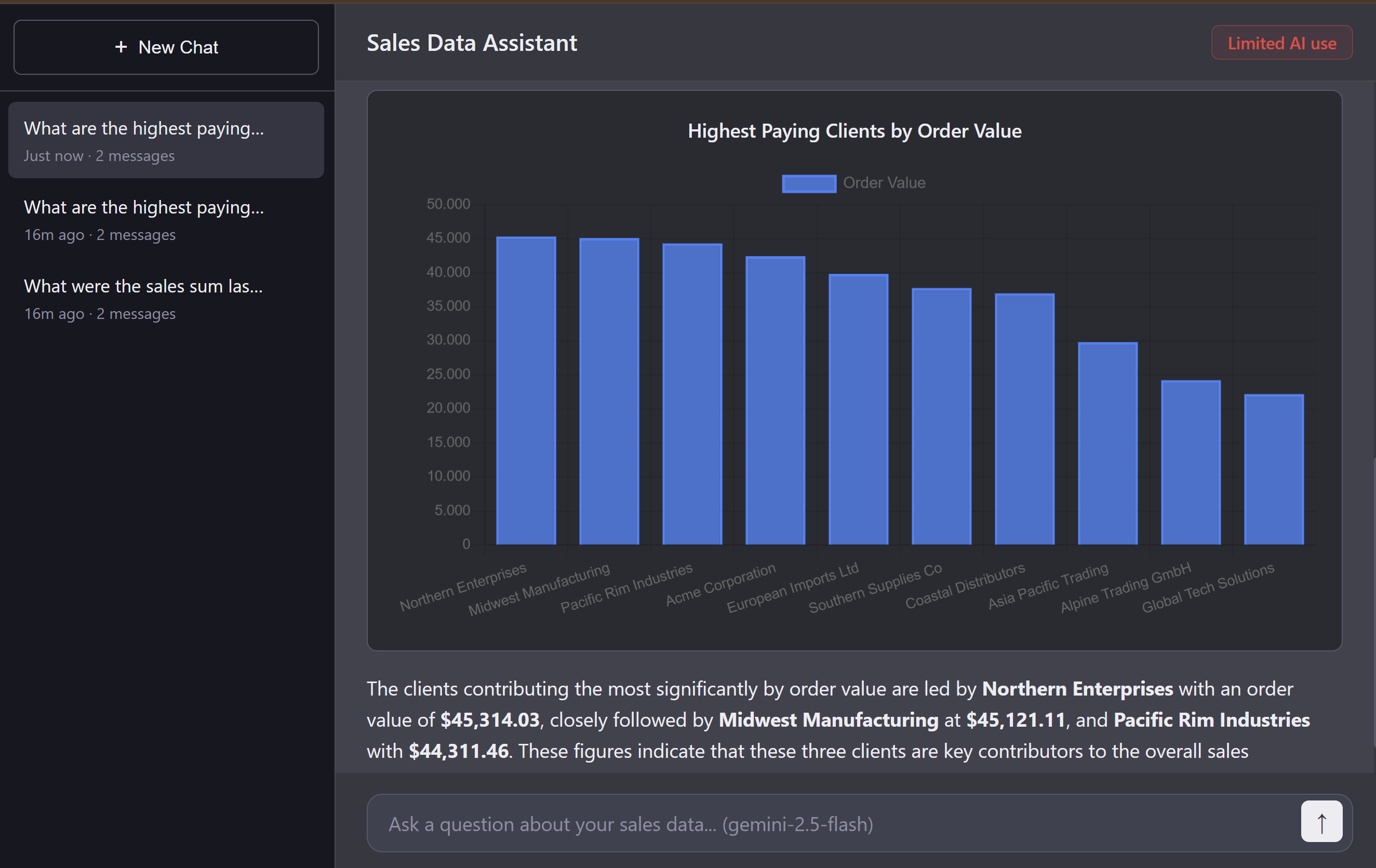

- Multi-Format Visualization: Dynamic rendering of results as tables, text summaries, and interactive charts (bar, line, pie, radar) using Chart.js

- Conversation Management: Persistent chat history, stored in YAML format, with save, load, and delete operations

- SQL Validation Layer: Server-side validation checks generated SQL against the database schema before it runs, helping to block injection attacks and malformed queries

- Responsive UI: Clean, modern interface with collapsible SQL query views and Markdown rendering
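As a rough sketch of how a result set might be routed to one of these formats, the Python below returns a text summary for a single value, a Chart.js bar chart for label/number pairs, and a table otherwise. The function name `present`, its return shape, and the selection heuristic are hypothetical illustrations, not the project's actual rules; only the `type`/`data`/`labels`/`datasets` layout follows Chart.js's documented configuration format.

```python
from numbers import Real


def present(columns: list[str], rows: list[tuple]) -> dict:
    """Hypothetical heuristic: choose text, chart, or table for a query result."""
    # A single scalar, e.g. from "SELECT COUNT(*) ...", reads best as a sentence.
    if len(columns) == 1 and len(rows) == 1:
        return {"kind": "text", "text": f"{columns[0]}: {rows[0][0]}"}

    # Label/number pairs map naturally onto a Chart.js bar chart.
    # bool is a subclass of int, so it is excluded explicitly.
    if len(columns) == 2 and rows and all(
        isinstance(r[1], Real) and not isinstance(r[1], bool) for r in rows
    ):
        return {
            "kind": "chart",
            "config": {
                "type": "bar",
                "data": {
                    "labels": [str(r[0]) for r in rows],
                    "datasets": [{"label": columns[1], "data": [r[1] for r in rows]}],
                },
            },
        }

    # Anything else falls back to a plain table.
    return {"kind": "table", "columns": columns, "rows": [list(r) for r in rows]}
```

On the frontend, the `config` dictionary (serialized to JSON by the backend) could be passed directly to `new Chart(canvas, config)`; switching `"type"` to `"line"`, `"pie"`, or `"radar"` reuses the same data layout.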

Technical Architecture

- Backend: Python/Flask application connected to one or more SQL databases

- AI Integration: Custom LLM provider abstraction layer supporting multiple backends

- Tool System: Model Context Protocol (MCP) server for structured database interactions

- Frontend: Vanilla JavaScript using the Fetch API, with no heavy frameworks

- Security: Schema-based SQL validation, read-only query enforcement
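One way to implement schema-based, read-only enforcement, assuming a SQLite backend, is SQLite's authorizer hook, exposed in Python as `sqlite3.Connection.set_authorizer`. The sketch below is not the project's validation layer: `run_read_only` and the `allowed_tables` allow-list are hypothetical, and a MySQL deployment would need a different mechanism (for example, a database user granted only `SELECT`).

```python
import sqlite3

# Authorizer actions a read-only SELECT needs; SQLITE_FUNCTION covers calls
# such as COUNT() or SUM() inside the query.
READ_ONLY_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ, sqlite3.SQLITE_FUNCTION}


def run_read_only(conn: sqlite3.Connection, sql: str, allowed_tables: set[str]) -> list[tuple]:
    """Hypothetical helper: run `sql` only if it just reads tables in `allowed_tables`."""

    def authorizer(action, arg1, arg2, db_name, trigger_name):
        if action not in READ_ONLY_ACTIONS:
            return sqlite3.SQLITE_DENY  # INSERT, UPDATE, DROP, PRAGMA, ATTACH, ...
        if action == sqlite3.SQLITE_READ and arg1 not in allowed_tables:
            return sqlite3.SQLITE_DENY  # table is not in the schema allow-list
        return sqlite3.SQLITE_OK

    conn.set_authorizer(authorizer)
    try:
        # A denied action makes execute() raise sqlite3.DatabaseError ("not authorized").
        # execute() also refuses strings that hold more than one statement.
        return conn.execute(sql).fetchall()
    finally:
        # Restore an allow-all authorizer so the app's own queries are unaffected.
        conn.set_authorizer(lambda *args: sqlite3.SQLITE_OK)
```

Because the authorizer runs while SQLite compiles the statement, it sees the operations and tables a query actually touches rather than pattern-matching the SQL text.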

Tech Stack

Python • Flask • Databases (MySQL, SQLite, ...) • Google Gemini API • OpenRouter • Ollama • Chart.js • YAML • MCP

Use Cases

Teams can query complex business metrics in plain English, for example "What are the top 5 customers by order value?" or "Show me sales trends for the last quarter". The AI handles schema discovery, query generation, and execution, then presents the results with contextual analysis.
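The loop behind this workflow might look roughly like the Python sketch below, which assumes a generic provider interface and plain-function tools. `LLMProvider`, `ScriptedProvider`, `make_tools`, `run_agent`, and the message format are hypothetical illustrations: they do not use the Gemini, OpenRouter, Ollama, or MCP SDKs, and `ScriptedProvider` replays canned replies so the example runs offline.

```python
import json
import sqlite3
from dataclasses import dataclass, field
from typing import Callable, Protocol


@dataclass
class ToolCall:
    name: str
    arguments: dict


@dataclass
class Reply:
    text: str = ""
    tool_calls: list[ToolCall] = field(default_factory=list)


class LLMProvider(Protocol):
    """Hypothetical interface that a Gemini, OpenRouter, or Ollama adapter would implement."""

    def chat(self, messages: list[dict], tool_names: list[str]) -> Reply: ...


class ScriptedProvider:
    """Hypothetical offline stand-in that replays canned replies instead of calling a model."""

    def __init__(self, replies: list[Reply]):
        self._replies = iter(replies)
        self.last_messages: list[dict] = []

    def chat(self, messages: list[dict], tool_names: list[str]) -> Reply:
        self.last_messages = list(messages)  # record what the "model" was shown
        return next(self._replies)


def make_tools(conn: sqlite3.Connection) -> dict[str, Callable[..., str]]:
    """Two hypothetical tools, schema discovery and query execution, both returning text."""

    def get_schema() -> str:
        rows = conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
        return "\n".join(sql for (sql,) in rows)

    def run_query(sql: str) -> str:
        return json.dumps(conn.execute(sql).fetchall())

    return {"get_schema": get_schema, "run_query": run_query}


def run_agent(provider: LLMProvider, tools: dict[str, Callable[..., str]],
              question: str, max_steps: int = 5) -> str:
    """Feed tool results back to the model until it answers in plain text."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = provider.chat(messages, list(tools))
        if not reply.tool_calls:
            return reply.text  # final natural-language answer
        for call in reply.tool_calls:
            result = tools[call.name](**call.arguments)
            messages.append({"role": "tool", "name": call.name, "content": result})
    raise RuntimeError("no answer within max_steps")
```

With this split, supporting another backend means writing one more class with the same `chat` method; the loop and the tools stay unchanged.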